“It’s not enough to gather climate data”

1 October 2018, by Ute Kreis

Photo: UHH/CEN/F.Brisc

Just a few days ago, the German Research Foundation gave the green light: the Cluster of Excellence “Climate, Climatic Change, and Society” (CliCCS) will receive seven years of funding, starting in 2019. Researchers are already discussing key aspects of the project, for example at the recent workshop on “Future Climate Data Management.” We talked with CliCCS spokesperson Prof. Dr. Detlef Stammer about why they want to make climate observations more accessible.

Prof. Stammer, researchers regularly publish journal articles, where their findings are reviewed, discussed and critically assessed. In addition, there are more and more Open Access portals – so why do we need this initiative?

It’s not enough to gather as much observational data as possible if it remains on in-house computers and in isolated databases. Our goal has to be to prepare climate information and actively make it available to the scientific community in a high-quality format, complete with error bars and metadata. This is just as true for observational data as it is for the results of computer models or for historical climate data, which first has to be digitized.

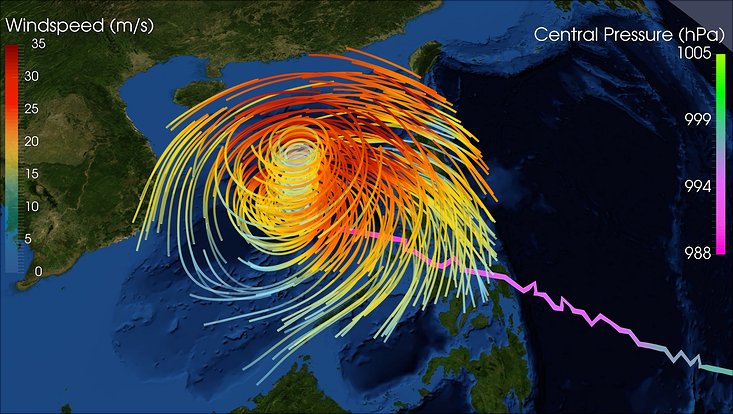

Since the 1990s, there have been substantial technological strides: autonomous monitoring systems, seamless satellite coverage, integrated climate models and high-performance computers have together produced a veritable treasure trove of data. The goal now is to find that treasure, to preserve it, and to put it to the best possible use, both today and tomorrow!

That all sounds good, so where’s the problem?

For researchers around the globe to be able to work with these findings, we need binding standards for the data and for how it flows. In other words, we need reliable quality control and a guaranteed supply of observational data, which can then be archived and consolidated at international data centers. The data formats used must be uniform and suitable for further use. In addition, every data point has to be supplemented with metadata recording when and where it was measured, by whom, how it has been processed so far, and so on.

Representatives of national and international climate research institutions and climate data centers have to work together to find solutions to these problems. The recent workshop was just the first step; ultimately, we could see a “national climate data center” that collaborates closely with international organizations such as the World Climate Research Programme, the World Meteorological Organization’s Global Climate Observing System, and the Intergovernmental Oceanographic Commission.

What will all this cost?

Of course, all this requires financial backing, and those funds need to be used sensibly. To ensure that, we first need a comprehensive assessment of the status quo: which data is where, in which format, and where there are still gaps. For example, a wealth of important historical data is available only on paper in the archives of federal offices and authorities and has yet to be made accessible.

That sounds like quite a bit of work. Is it worth it?

The initiative is indispensable. Much of what we know today we would never have discovered without systematic long-term data collection and exchanges with other centers at the national and international level. In this regard, Hamburg’s Integrated Climate Data Center (ICDC) has made valuable progress over the past ten years. Ultimately, of course, managing data from around the globe isn’t the responsibility of a single institution or nation; internationally, all of the relevant players have to work hand in hand.